Drupal logging on Pantheon with Monolog and Logz

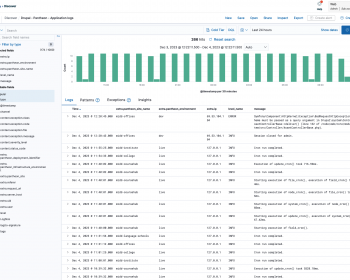

Background

Since 2014, my team at Middlebury has been using the “ELK” stack (Elasticsearch, Logstash, & Kibana) to aggregate and analyze our application, webserver, PHP, and CDN logs. Over the years I’ve built dashboards and visualizations that allow us to monitor application health and performance, drilling into problem areas and…